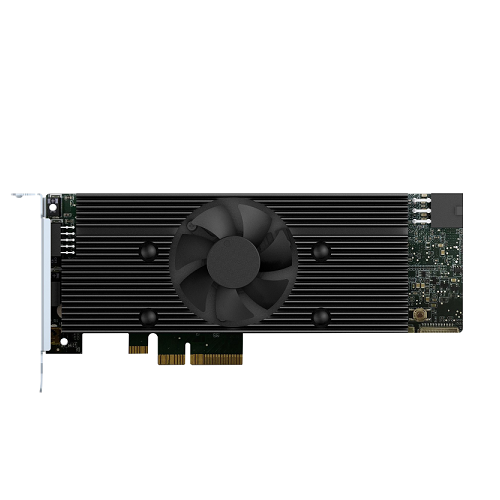

QNAP Computing Accelerator Card with 8 x Movidius Myriad X MA2485 VPU, PCIe gen2 x4 interface, RoHS – Mustang-V100-MX8-R10

For immediate delivery, contact the sales team. Orders usually ship in 2-3 days; backorders ship in 4-5 weeks. Images are for illustration purposes only.

Call for Price

Mustang-V100 (VPU)

Overview

Features

As QNAP NAS evolves to support a wider range of applications (including surveillance, virtualization, and AI), you need not only more storage space on your NAS but also more processing power to optimize targeted workloads. The Mustang-V100 is a PCIe-based accelerator card that uses Intel® Movidius™ VPUs to drive the demanding workloads of modern computer vision and AI applications. It can be installed in a PC or a compatible QNAP NAS to accelerate AI deep learning inference workloads.

- Half-height, half-length, single-slot compact size.

- Low power consumption, approximately 2.5 W for each Intel® Movidius™ Myriad™ X VPU.

- Supports the OpenVINO™ toolkit; ready for AI edge computing.

- Eight Intel® Movidius™ Myriad™ X VPUs can execute eight topologies simultaneously.
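As a rough sanity check on the power figures, the per-VPU number above can be set against the card-level ceiling given in the hardware specs on this page (<30 W). The sketch below is plain arithmetic using only those two figures. What accounts for the remaining headroom is not broken down on this page.

```python
# Figures taken from this page; the per-VPU value is approximate.
VPU_COUNT = 8
WATTS_PER_VPU = 2.5       # "approximately 2.5 W" per Myriad X VPU
CARD_LIMIT_WATTS = 30.0   # hardware specs list power consumption as <30 W

vpu_total = VPU_COUNT * WATTS_PER_VPU    # combined draw of the eight VPUs
headroom = CARD_LIMIT_WATTS - vpu_total  # what is left under the card ceiling

print(f"VPUs combined: {vpu_total:.1f} W")
print(f"Remaining under the 30 W ceiling: {headroom:.1f} W")
```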

OpenVINO™ toolkit

The OpenVINO™ toolkit is based on convolutional neural networks (CNNs). It extends workloads across Intel® hardware and maximizes performance.

It can optimize pre-trained deep learning models from frameworks such as Caffe, MXNet, and TensorFlow into IR binary files, then run them with the Inference Engine heterogeneously across Intel® hardware such as CPUs, GPUs, the Intel® Movidius™ Neural Compute Stick, and FPGAs.
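The conversion step might look like the sketch below, which only builds the command line for a Model Optimizer run and does not execute it. It is a sketch under several assumptions: the script name `mo.py` and its location depend on your installation. The `--input_model`, `--data_type`, and `--output_dir` flags are those of the Model Optimizer in older OpenVINO releases and may differ in yours. FP16 is used because that is the precision Myriad-class VPUs typically run. The file name `alexnet.caffemodel` is hypothetical.

```python
from pathlib import Path
from typing import List


def build_mo_command(model_path: str, output_dir: str, mo_script: str = "mo.py") -> List[str]:
    """Return the argv list for a Model Optimizer run that emits FP16 IR files."""
    return [
        "python3", mo_script,
        "--input_model", model_path,   # trained model, e.g. a Caffe .caffemodel
        "--data_type", "FP16",         # assumption: half precision for the VPU target
        "--output_dir", output_dir,
    ]


def expected_ir_files(model_path: str, output_dir: str) -> List[str]:
    """IR output is an .xml (topology) plus a .bin (weights), by default named after the model."""
    stem = Path(model_path).stem
    return [str(Path(output_dir) / f"{stem}.xml"), str(Path(output_dir) / f"{stem}.bin")]


cmd = build_mo_command("alexnet.caffemodel", "ir_out")
print(" ".join(cmd))
print(expected_ir_files("alexnet.caffemodel", "ir_out"))
```

Passing the list to `subprocess.run(cmd, check=True)` would perform the conversion on a machine where the toolkit is installed.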

Get deep learning acceleration on Intel-based Server/PC

You can install the Mustang-V100 in a PC or workstation running Linux® (Ubuntu®) to accelerate applications such as deep learning inference, video streaming, and data center workloads. As an acceleration solution for real-time AI inference, the Mustang-V100 also works with the Intel® OpenVINO™ toolkit to optimize inference workloads for image classification and computer vision.

- Operating Systems: Ubuntu 16.04.3 LTS 64-bit, CentOS 7.4 64-bit, Windows 10 (more OS support coming soon)

- OpenVINO™ Toolkit

  - Intel® Deep Learning Deployment Toolkit

    - Model Optimizer

    - Inference Engine

  - Optimized computer vision libraries

  - Intel® Media SDK

  - *OpenCL™ graphics drivers and runtimes

  - Current Supported Topologies: AlexNet, GoogleNet V1, Yolo Tiny V1 & V2, Yolo V2, SSD300, ResNet-18, Faster-RCNN (more variants coming soon)

- High flexibility: the Mustang-V100-MX8 is developed on the OpenVINO™ toolkit structure, which allows models trained in frameworks such as Caffe, TensorFlow, and MXNet to run on it after conversion to optimized IR.

*OpenCL™ is a trademark of Apple Inc., used by permission by Khronos.
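Once a model has been converted to IR, loading it with the Inference Engine could look like the following sketch. It assumes the legacy `openvino.inference_engine` Python API (`IECore`, `read_network`, `load_network`) is installed and that the card is exposed under the `"HDDL"` device name. Both depend on your OpenVINO version and driver setup, so treat them as assumptions. The import is deferred so the helper for locating the weights file works without the toolkit. The path `ir_out/alexnet.xml` is hypothetical.

```python
from pathlib import Path


def weights_path_for(xml_path: str) -> str:
    """IR weights (.bin) conventionally sit next to the topology file (.xml)."""
    return str(Path(xml_path).with_suffix(".bin"))


def load_on_accelerator(xml_path: str, device: str = "HDDL"):
    """Load an IR model onto the given Inference Engine device.

    Assumes the legacy openvino.inference_engine API and that the
    accelerator card is reachable under the "HDDL" device name.
    """
    # Imported lazily: the toolkit is an optional dependency of this sketch.
    from openvino.inference_engine import IECore

    ie = IECore()
    net = ie.read_network(model=xml_path, weights=weights_path_for(xml_path))
    return ie.load_network(network=net, device_name=device)


print(weights_path_for("ir_out/alexnet.xml"))
```

On a host with the toolkit and card drivers installed, `load_on_accelerator("ir_out/alexnet.xml")` would return an executable network ready for inference requests.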

QNAP NAS as an Inference Server

OpenVINO™ toolkit extends workloads across Intel® hardware (including accelerators) and maximizes performance. When used with QNAP’s OpenVINO™ Workflow Consolidation Tool, the Intel®-based QNAP NAS presents an ideal Inference Server that assists organizations in quickly building an inference system. Providing a model optimizer and inference engine, the OpenVINO™ toolkit is easy to use and flexible for high-performance, low-latency computer vision that improves deep learning inference. AI developers can deploy trained models on a QNAP NAS for inference, and install the Mustang-V100 to achieve optimal performance for running inference.

Learn More: OpenVINO™ Workflow Consolidation Tool

Note: QTS 4.4.0 (or later) and OWCT v1.1.0 are required for the QNAP NAS.

Easy-to-manage Inference Engine with QNAP OWCT

Note:

1. Check the Compatibility List for supported models.

2. The “Mustang Card User Driver” must be installed from the QTS App Center.

Applications

Dimensions

Note:

Up to 16 cards are supported with operating systems other than QTS; the QNAP TS-2888X NAS supports up to 8 cards. Assign a card ID number (from 0 to 15) to each Mustang-V100 manually using the rotary switch. The assigned card ID is shown on the card's LED display after power-up.
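When planning a multi-card build, the limits in this note can be checked before touching the hardware. The sketch below is a hypothetical helper, not a QNAP tool. It validates a planned set of rotary-switch IDs against the 0 to 15 range and the per-platform card limits stated above. Treating duplicate IDs as an error is an assumption: the note does not say so explicitly, but the ID is used to identify each card.

```python
from typing import Iterable, List

MAX_CARD_ID = 15          # rotary switch range is 0-15
MAX_CARDS_GENERIC = 16    # operating systems other than QTS
MAX_CARDS_TS_2888X = 8    # QNAP TS-2888X NAS


def check_card_ids(ids: Iterable[int], max_cards: int = MAX_CARDS_GENERIC) -> List[str]:
    """Return a list of problems found in a planned set of rotary-switch IDs."""
    ids = list(ids)
    problems = []
    if len(ids) > max_cards:
        problems.append(f"{len(ids)} cards planned, but only {max_cards} are supported")
    for card_id in ids:
        if not 0 <= card_id <= MAX_CARD_ID:
            problems.append(f"ID {card_id} is outside 0-{MAX_CARD_ID}")
    # Assumption: each card needs its own ID so it can be told apart.
    duplicates = sorted({i for i in ids if ids.count(i) > 1})
    if duplicates:
        problems.append(f"duplicate IDs: {duplicates}")
    return problems


print(check_card_ids([0, 1, 2, 3]))
print(check_card_ids([0, 1, 1, 16]))
```

An empty list means the plan fits the stated limits; pass `MAX_CARDS_TS_2888X` as `max_cards` when the target is the TS-2888X.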

Short Tech Specification

| Computing Accelerator Card with 8 x Movidius Myriad X MA2485 VPU, PCIe gen2 x4 interface, RoHS |

Detailed Tech Specification

Hardware Specs

Mustang-V100-MX8-R10

| Model | Mustang-V100-MX8-R10 |
| --- | --- |
| Main Chip | Eight Intel® Movidius™ Myriad™ X MA2485 VPUs |
| Operating System | Ubuntu 16.04.3 LTS 64-bit, CentOS 7.4 64-bit (Windows 10 support planned for the end of 2018; more OS support coming soon) |
| Operating Humidity | 5% ~ 90% |
| Dataplane Interface | PCI Express x4, compliant with PCI Express Specification V2.0 |
| Power Consumption | <30 W |
| Operating Temperature | 5°C ~ 55°C (ambient temperature) |
| Cooling | Active fan |
| Dimensions | Half-Height, Half-Length, Single-slot PCIe |
| Power Connector | *Preserved PCIe 6-pin 12V external power |
| DIP Switch/LED Indicator | Identifies the card number |
